Building Decentralized AI (DeAI): Understanding the Modular AI Tech Stack

A decentralized, modular AI tech stack empowers developers to build, scale, and monetize AI agents. Learn how the tech stack works.

Artificial intelligence (AI) is increasingly at the core of modern products and user-facing solutions. Many companies are building AI or embedding it into their systems and product portfolios.

Despite those efforts, the current AI landscape reveals fundamental limitations: lack of specialization, risks of bias and hallucination, and blurry data privacy lines.

The majority of today’s most popular AI solutions are developed and controlled by a handful of big tech companies.

Waiting for these companies to address these issues means granting them unchecked control over our digital lives while deepening our dependence on them. They can see what data users submit, control how the data is used and processed, and determine what outputs we receive—effectively shaping our digital reality.

In this post, we will see how decentralization and open-source development help fix these issues through a modular redesign of the AI tech stack. We will explain the components of the stack, how they work together, and how Gaia combines them to build decentralized AI.

Understanding the Need for Modularity in AI Development

Today’s dominant players, already flush with access to user data, compute power, and other resources, have very little incentive to fix the limitations mentioned above.

Why?

Centralization favors the big players because it lets them control the power dynamics: they can raise entry barriers, reduce or buy out competition, influence policy, and monetize at will.

To counter this, the decentralization and open-source movements are coming together to build a more equitable, inclusive, and transparent alternative: decentralized AI.

Decentralization in AI offers five key benefits:

- Users and organizations alike can maintain control over their proprietary and sensitive data while still using AI capabilities.

- Developers are free to experiment with different combinations of models, domain knowledge, user data, and compute.

- Open-source components allow for community auditing and improvement of AI solutions.

- Modularity allows AI systems to be customized and fine-tuned for specific industries or use cases, leading to more effective and targeted outcomes.

- Decentralization empowers everyone to permissionlessly build, access, modify, and even monetize specialized AI solutions.

Gaia is a front-runner in decentralized AI. We are reimagining AI as a modular, composable stack to allow anyone to build, customize, and scale AI agents.

To take advantage of modularity and build an effective and personalized AI solution, one needs a deeper understanding of the decentralized AI tech stack.

In the next section, we will go over the decentralized AI tech stack, its moving parts, the idea of composability, and how Gaia facilitates all of these.

Unbundling The Tech Stack of Decentralized AI

Decentralized AI is not just about replacing centralized models with open-source alternatives. It requires a complete reimagining of how AI systems are built, deployed, and managed from scratch.

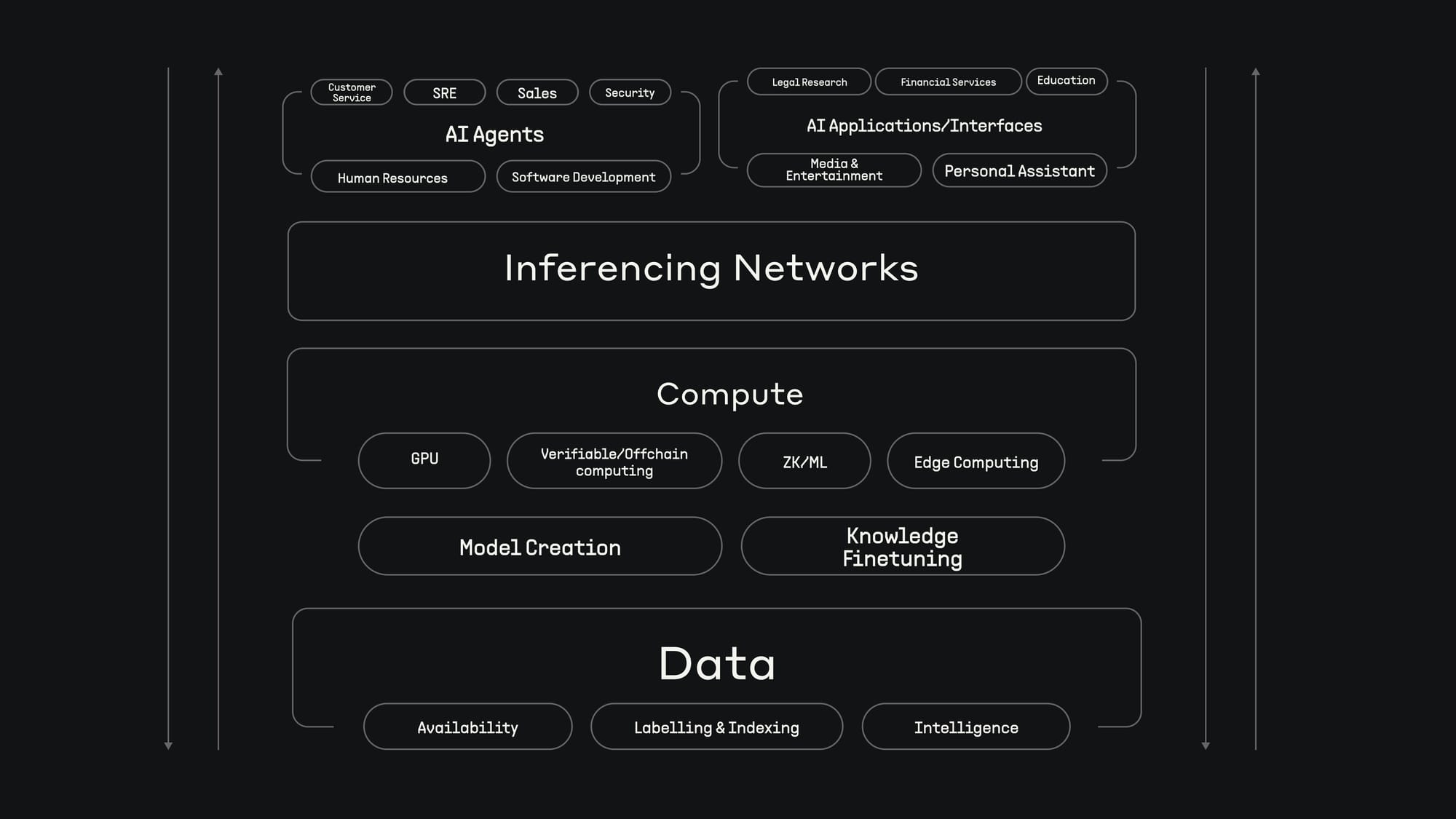

Here are three foundational components that form the tech stack of decentralized AI:

- Technical architecture: The foundation of decentralized AI, responsible for the compute, data, and AI model layers that make up the core infrastructure.

- Applications & interfaces: The interface where developers and end-users interact with AI agents and tools.

- Developer orchestration and inferencing: The central orchestrator that manages the flow of information, compute, and coordination in an AI agent.

Let’s now dive deeper into each component of the decentralized AI tech stack.

Technical Architecture — The Workhorses of Decentralized AI

The technical architecture of decentralized AI consists of four critical layers that work together to provide a flexible and modular AI development framework.

Decentralized compute layer

This layer distributes computational tasks across a network of GPU pools, edge devices, and privacy-preserving compute protocols. Instead of depending on centralized data centers, decentralized compute systems allocate workloads dynamically across a distributed network.

Key components include:

- GPU pools for intensive model training and inference.

- Edge devices for local processing and reduced latency.

- Verifiable computation using zk-SNARKs to ensure computation integrity while preserving privacy.

Gaia orchestrates these compute resources, ensuring tasks are balanced across the network and executed with verifiable accuracy.
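
To make dynamic workload allocation concrete, here is a minimal sketch of a least-loaded scheduler. It is not Gaia's actual scheduler: the `ComputeNode` class, the capacity numbers, and the `assign_tasks` function are hypothetical names chosen for illustration, and a real network would also account for hardware type, price, and proof verification.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeNode:
    """Hypothetical compute provider (a GPU pool or an edge device)."""
    name: str
    capacity: int                       # max concurrent tasks the node accepts
    assigned: list = field(default_factory=list)

    @property
    def load(self) -> float:
        # Fraction of the node's capacity currently in use.
        return len(self.assigned) / self.capacity


def assign_tasks(tasks, nodes):
    """Give each task to the node with the lowest relative load."""
    for task in tasks:
        candidates = [n for n in nodes if len(n.assigned) < n.capacity]
        if not candidates:
            raise RuntimeError(f"no free capacity for task {task!r}")
        target = min(candidates, key=lambda n: n.load)
        target.assigned.append(task)
    return {n.name: list(n.assigned) for n in nodes}


nodes = [ComputeNode("gpu-pool-a", capacity=4), ComputeNode("edge-1", capacity=2)]
placement = assign_tasks([f"task-{i}" for i in range(5)], nodes)
print(placement)
```

Because load is measured relative to capacity, the larger GPU pool ends up with more tasks than the small edge device, which is the behavior a balanced network would want.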

More importantly, Gaia has teamed up with Jiritsu, a trustless operating system built on zkMPC (Zero-Knowledge Multi-Party Computation). Through this partnership, Gaia aims to build custom AI agents whose computation is verified through Jiritsu’s zkMPC.

To learn more about the partnership, read this: GaiaNet Collaborates with Jiritsu to Advance Decentralized AI Operations

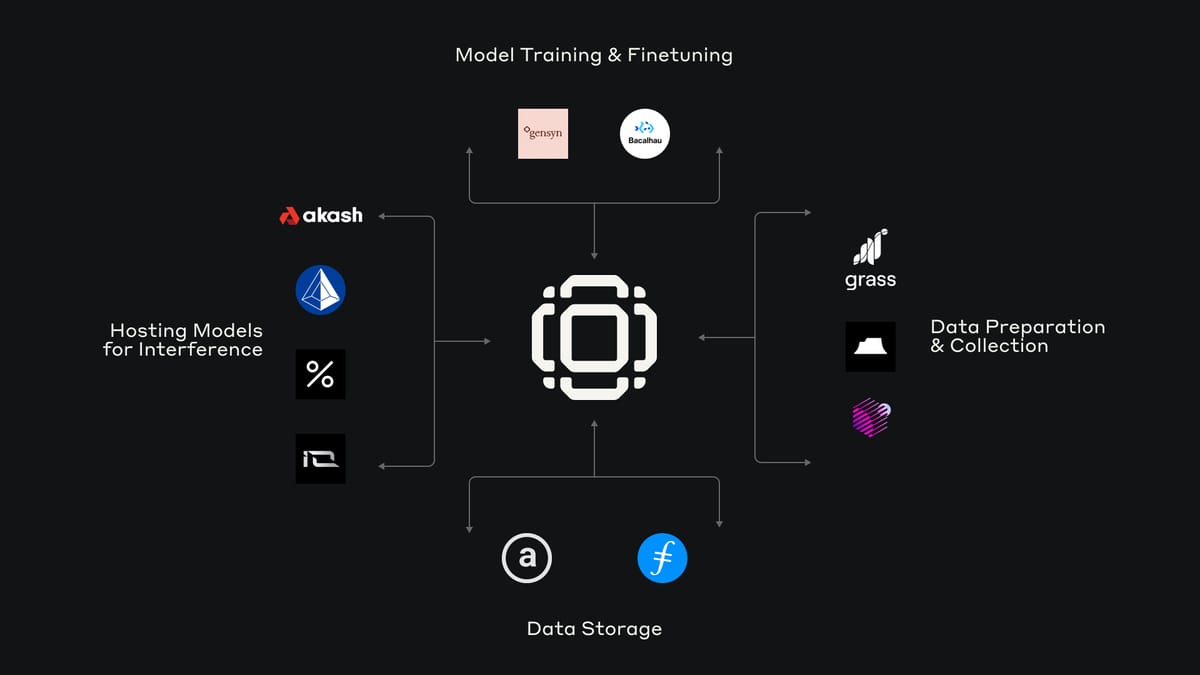

Data Layer

The data layer is responsible for managing data storage, availability, and transformation across decentralized networks. This infrastructure is essential for supplying the high-quality datasets needed to train and fine-tune AI models.

The decentralized data layer provides:

- Distributed storage across networks like IPFS, Arweave, and EigenDA, ensuring data remains available and stored redundantly.

- Community-driven data labeling, indexing, and validation mechanisms that keep data organized and easy to access.

- Oracle networks that feed real-time data while maintaining accuracy.

Gaia ensures secure, efficient access to these decentralized data sources, enabling the continuous flow of data required for AI development and refinement.
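
The idea behind redundant, distributed storage can be sketched in a few lines. The example below is a toy content-addressed store, assuming SHA-256 hashes as addresses and in-memory dictionaries as peers. It does not use the IPFS, Arweave, or EigenDA APIs, and `ReplicatedStore` is a hypothetical name.

```python
import hashlib


class ReplicatedStore:
    """Toy content-addressed store that writes each blob to several peers."""

    def __init__(self, peer_names, replicas=2):
        if replicas > len(peer_names):
            raise ValueError("replicas cannot exceed the number of peers")
        self.peers = {name: {} for name in peer_names}   # peer -> {key: blob}
        self.replicas = replicas

    def put(self, blob: bytes) -> str:
        key = hashlib.sha256(blob).hexdigest()   # address derived from content
        names = sorted(self.peers)
        start = int(key, 16) % len(names)        # deterministic placement
        for i in range(self.replicas):
            self.peers[names[(start + i) % len(names)]][key] = blob
        return key

    def get(self, key: str) -> bytes:
        for store in self.peers.values():
            blob = store.get(key)
            # Re-hash to check that the peer returned untampered data.
            if blob is not None and hashlib.sha256(blob).hexdigest() == key:
                return blob
        raise KeyError(key)


store = ReplicatedStore(["peer-a", "peer-b", "peer-c"], replicas=2)
key = store.put(b"labelled training example")
holders = [name for name, data in store.peers.items() if key in data]
store.peers[holders[0]].clear()     # simulate one peer going offline
recovered = store.get(key)          # still served by the second replica
print(recovered)
```

Addressing data by its hash means any reader can verify what it receives, and keeping more than one replica means a single peer going offline does not make the data unavailable.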

Recently, Gaia has partnered with Vana, a distributed network for user-owned data, to build a more transparent, equitable, and user-centric AI ecosystem.

Find more about the partnership here: Gaia x Vana: Partnership Announcement

AI Model Layer

The AI model layer focuses on the creation, training, and deployment of AI models in a decentralized environment. This layer allows for modularity, enabling seamless customization and scaling of models, and making specialized AI possible through:

- Purpose-built large language models (LLMs) and specialized models optimized for specific industries, such as finance, chemistry, or healthcare.

- Decentralized training frameworks allow AI models to be created and updated using distributed compute resources.

- Fine-tuning tools enable models to learn and adapt continuously from decentralized data streams, ensuring that they remain accurate and updated.
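
One way to picture this modularity is a registry that maps each domain to its latest specialized model and falls back to a general model otherwise. The sketch below is an assumption for illustration only: `ModelEntry`, `ModelRegistry`, and the model names are hypothetical and do not describe a Gaia API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    name: str       # hypothetical model identifier
    domain: str     # e.g. "finance", "healthcare", or "general"
    version: int


class ModelRegistry:
    def __init__(self):
        self._by_domain = {}

    def register(self, entry: ModelEntry) -> None:
        current = self._by_domain.get(entry.domain)
        # Keep only the newest version registered for each domain.
        if current is None or entry.version > current.version:
            self._by_domain[entry.domain] = entry

    def resolve(self, domain: str) -> ModelEntry:
        # Fall back to the general-purpose model when no specialist exists.
        if domain in self._by_domain:
            return self._by_domain[domain]
        return self._by_domain["general"]


registry = ModelRegistry()
registry.register(ModelEntry("general-llm", "general", 1))
registry.register(ModelEntry("finance-llm", "finance", 1))
registry.register(ModelEntry("finance-llm-tuned", "finance", 2))

print(registry.resolve("finance").name)    # finance-llm-tuned
print(registry.resolve("chemistry").name)  # general-llm
```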

Inference and Deployment Layer

This layer handles the real-time deployment and serving of AI models, leveraging a decentralized network of inference nodes to distribute and process requests efficiently.

The inference and deployment layer provides:

- A network of inference nodes for localized model serving.

- Load balancing to optimize performance and resource usage.

- Edge deployment options for latency-sensitive applications.
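
As a rough illustration of localized serving, the sketch below routes a request to a healthy node in the caller's region when one exists and otherwise to the fastest healthy node anywhere. The `InferenceNode` fields and the `pick_node` function are hypothetical, and the latency figures are assumed to be measured elsewhere.

```python
from dataclasses import dataclass


@dataclass
class InferenceNode:
    node_id: str
    region: str
    latency_ms: float     # recent average latency, assumed to be measured elsewhere
    healthy: bool = True


def pick_node(nodes, client_region):
    """Prefer healthy nodes in the caller's region, then the lowest latency."""
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        raise RuntimeError("no healthy inference nodes available")
    # Tuples sort by locality first (False < True), then by latency.
    return min(healthy, key=lambda n: (n.region != client_region, n.latency_ms))


nodes = [
    InferenceNode("eu-1", "eu", 80.0),
    InferenceNode("eu-2", "eu", 45.0),
    InferenceNode("us-1", "us", 20.0),
]
local_choice = pick_node(nodes, "eu").node_id      # eu-2

# If every EU node fails its health check, traffic moves to another region.
nodes[0].healthy = False
nodes[1].healthy = False
fallback_choice = pick_node(nodes, "eu").node_id   # us-1
print(local_choice, fallback_choice)
```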

We have now covered the components that make up decentralized AI, but how do they coordinate and work together to bring AI agents and solutions to life?

Gaia: The Orchestration Layer That Brings It All Together

Gaia is an open-source framework that ensures the smooth operation and integration of different components—data, compute resources, AI models, and applications—while providing the tools and governance structures necessary for developers to build robust, specialized AI solutions.

Gaia’s open-source framework allows developers to deploy AI agents based on specific knowledge bases easily.

This orchestration layer is what brings composability to life, allowing the different parts of the AI stack to function together seamlessly.
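
Composability is easiest to see in code. The following is a minimal sketch, not Gaia's framework: an `Agent` is assembled from two interchangeable parts, a retriever over a knowledge base and a model. The `keyword_retriever` and `echo_model` helpers are hypothetical stand-ins for a real vector search and a real model call.

```python
from dataclasses import dataclass
from typing import Callable, List

Retriever = Callable[[str], List[str]]   # question -> relevant passages
Model = Callable[[str], str]             # prompt -> completion


@dataclass
class Agent:
    retrieve: Retriever
    generate: Model

    def answer(self, question: str) -> str:
        context = self.retrieve(question)
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
        return self.generate(prompt)


def keyword_retriever(docs):
    """Return a retriever that keeps docs sharing a word with the question."""
    def retrieve(question):
        words = set(question.lower().split())
        return [d for d in docs if words & set(d.lower().split())]
    return retrieve


def echo_model(prompt):
    # Stand-in for a real model call; it only reports the prompt size.
    return f"(stub model) received {len(prompt.splitlines())} prompt lines"


docs = ["Gaia nodes host AI agents", "Domains group similar nodes"]
agent = Agent(retrieve=keyword_retriever(docs), generate=echo_model)
reply = agent.answer("What do nodes host?")
print(reply)

# Swapping a component does not require touching the rest of the agent.
shouting_agent = Agent(retrieve=lambda q: [], generate=str.upper)
shouted = shouting_agent.answer("hi")
```

Each part only has to honor a small interface, so a different knowledge base or model can be dropped in without changing the agent itself.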

There are five primary functions of Gaia in the decentralized AI tech stack:

- Orchestration

Gaia coordinates the entire decentralized AI stack by managing task flows, compute resources, and communication between components. It balances workloads across nodes, ensuring optimal resource allocation and preventing bottlenecks.

- Inferencing

The inferencing layer executes AI models in real-world applications. It distributes processing across nodes to deliver rapid responses for tasks like image recognition, language translation, or customer service. By decentralizing inference, Gaia enables AI services to scale while maintaining consistent performance.

- Node Management

Gaia deploys and manages nodes – the network's building blocks – across edge devices and cloud infrastructure. These nodes host AI agents and run inference tasks. The distributed architecture eliminates single points of failure and enables flexible scaling based on demand.

The geographical distribution of Gaia nodes, as shown below, echoes the commitment to decentralization.

- Incentive Alignment

Gaia has built-in token mechanisms that align the interests of all participants. Node operators earn rewards for providing compute, developers for contributing code, and data providers for sharing datasets. This economic layer ensures sustainable network growth and incentivizes quality contributions.
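
To illustrate how such an economic layer could work, here is a sketch that splits one reward pool in proportion to contribution scores. The scoring scheme, the pool size, and the `split_rewards` function are assumptions made for this example and do not describe Gaia's actual token mechanics.

```python
from fractions import Fraction


def split_rewards(pool: int, contributions: dict) -> dict:
    """Split `pool` whole tokens in proportion to integer contribution scores."""
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("at least one positive contribution is required")
    shares = {
        who: int(Fraction(score, total) * pool)   # round down to whole tokens
        for who, score in contributions.items()
    }
    # Hand the rounding remainder to the largest contributor so that
    # the payouts always sum to the pool exactly.
    remainder = pool - sum(shares.values())
    top = max(contributions, key=contributions.get)
    shares[top] += remainder
    return shares


payouts = split_rewards(
    1000,
    {"node-operator": 50, "developer": 30, "data-provider": 20},
)
print(payouts)
```

Using exact fractions instead of floating-point numbers avoids rounding drift, which matters when the payouts must add up to a fixed pool.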

- Governance

A DAO-based governance framework enables transparent decision-making and resource management. Stakeholders can propose and vote on protocol changes, ensuring the network evolves to meet community needs while maintaining operational integrity.
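
A proposal vote of this kind can be sketched as follows. We assume token-weighted voting with a turnout quorum, which is a common DAO design but is an assumption here rather than a description of Gaia's governance rules. The `tally` function and the balances are hypothetical.

```python
def tally(votes: dict, balances: dict, quorum: float = 0.5) -> str:
    """Token-weighted yes/no vote; `quorum` is the minimum turnout share."""
    total_supply = sum(balances.values())
    yes = sum(balances[voter] for voter, choice in votes.items() if choice == "yes")
    no = sum(balances[voter] for voter, choice in votes.items() if choice == "no")
    turnout = (yes + no) / total_supply
    if turnout < quorum:
        return "no quorum"
    return "passed" if yes > no else "rejected"


balances = {"alice": 40, "bob": 35, "carol": 25}

print(tally({"alice": "yes", "carol": "no"}, balances))   # passed
print(tally({"carol": "yes"}, balances))                  # no quorum
```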

Now, all of these components are under the hood and invisible to the end user. The next section covers the user-facing layer of applications and interfaces.

AI Applications & Interfaces Layer — Where AI Meets Users

The AI Applications & Interfaces Layer is where decentralized AI solutions come to life, connecting the underlying technology with end-users and developers. It serves as the gateway through which AI-powered tools and agents interact with the real world, providing tangible benefits across a wide range of industries and use cases.

Some examples of applications within the applications and interfaces layer are:

- Customer service agents: AI agents that handle customer inquiries autonomously.

- Financial analytics tools: AI agents trained to analyze financial data, run real-time market simulations, or provide insights to aid trading or analysis.

- Software development assistants: AI coding assistants that help developers write, debug, and optimize code.

For applications and interfaces, Gaia offers two major solutions to help developers and users use DeAI and Gaia agents easily.

- Gaia Domains

Gaia Domains give end applications visibility into the network. Technically, a domain is a group of similar nodes organized into a trusted cluster, which makes decentralized AI services discoverable and reliable.

Each domain is managed and verified by its domain operator, who ensures that all nodes within the domain meet specific quality standards and function cohesively.

The key benefits of Gaia domains are:

- Quality assurance: Domain operators validate nodes to ensure they meet performance and reliability standards.

- Load balancing: Requests are distributed across domain nodes for optimal performance.

- Specialized services: Users can easily find AI agents tailored to specific industries or use cases.

- Economic alignment: Each operator is accountable to the domain’s users, and if nodes fail to deliver reliable or accurate results, the operator risks losing both trust and economic rewards.

- Trust layer: Domain operators stake their reputation by ensuring that the nodes within their domain perform as expected.

Simply put, Gaia Domains provide users with the visibility needed to understand and trust the AI services they interact with.
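
The quality assurance and load balancing roles of a domain can be sketched together. In the example below, a hypothetical `Domain` class admits only nodes whose health report meets its thresholds and then rotates requests across the admitted nodes. The thresholds and field names are assumptions for illustration, not Gaia's actual admission criteria.

```python
import itertools
from dataclasses import dataclass


@dataclass
class NodeReport:
    node_id: str
    uptime: float            # fraction of successful health checks, 0.0 to 1.0
    p95_latency_ms: float


class Domain:
    def __init__(self, name, min_uptime=0.99, max_latency_ms=500):
        self.name = name
        self.min_uptime = min_uptime
        self.max_latency_ms = max_latency_ms
        self.members = []
        self._cycle = None

    def admit(self, report: NodeReport) -> bool:
        """Operator-side validation: accept the node only if it meets the bar."""
        ok = (report.uptime >= self.min_uptime
              and report.p95_latency_ms <= self.max_latency_ms)
        if ok:
            self.members.append(report.node_id)
            # Rebuild the rotation so the new member receives traffic.
            self._cycle = itertools.cycle(list(self.members))
        return ok

    def route(self) -> str:
        """Round-robin load balancing across validated nodes."""
        if not self.members:
            raise RuntimeError("domain has no validated nodes")
        return next(self._cycle)


domain = Domain("finance-agents")
results = [
    domain.admit(NodeReport("node-1", uptime=0.999, p95_latency_ms=120)),
    domain.admit(NodeReport("node-2", uptime=0.95, p95_latency_ms=90)),
    domain.admit(NodeReport("node-3", uptime=0.995, p95_latency_ms=300)),
]
routed = [domain.route() for _ in range(4)]
print(results, routed)
```

Here `node-2` is rejected for low uptime, so users of the domain only ever reach the two nodes that passed validation.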

- Gaia DeAI Marketplace

The Gaia marketplace is a venue for trading AI assets built from individualized knowledge bases and AI components. Think of it as a "YouTube for knowledge and skills" where experts can monetize their specialized knowledge and AI capabilities.

Some components that are most likely to be made available for trade are:

- Fine-tuned AI models

- Knowledge bases and datasets

- Function-calling plugins

- Custom prompts and workflows

Why does the Gaia Marketplace matter?

- AI developers can access high-quality, ready-made components to build their agents efficiently.

- Developers and contributors are rewarded with Gaia tokens, empowering creators to monetize their intellectual property.

- Enterprises and businesses can build company-specific AI agents without massive infrastructure investments.

Building Decentralized AI Made Simple by Gaia

Decentralized AI, as pioneered by Gaia, represents a fundamental shift in how AI will evolve and be used. We will be moving away from AI controlled by a few tech giants and towards allowing anyone and everyone to contribute to and benefit from AI.

This democratization of AI development means more purpose-built solutions instead of generic models. Specialists and domain experts contributing to these specialized AI agents and solutions are going to be fairly compensated.

At Gaia, we're building the foundational infrastructure that enables all of the above and makes decentralized AI accessible and practical.

Whether you're a developer looking to build custom AI agents, an enterprise seeking secure AI solutions, or a domain expert wanting to monetize your knowledge, Gaia provides the tools and infrastructure you need.

Here are some resources to help you get started with Gaia:

Gaia User Guide: https://docs.gaianet.ai/category/gaianet-user-guide

Chat UI: https://knowledge2.gaianet.network/chatbot-ui/index.html

Node installation: https://github.com/GaiaNet-AI/gaianet-node

How to install a Gaia Node:

- https://drive.google.com/file/d/1j0rndzozYWX4y5ftY1XVgSDNXxvhgXcw/view

- https://youtu.be/b2qlaKqyKoE?si=WZdEU-mvJZayW03I

Demo videos: